A Visual Chronology of Robots

I was just updating my official resume, and felt like making a more fun version. Here are all of the robots that I’ve worked with and could find pictures of. In chronological order:

Bob was Team Caltech’s entry for the 2004 DARPA Grand Challenge, which was a race across the desert for fully autonomous vehicles. I wasn’t involved in that competition, but we used the vehicle for a course project and in early preparations for the 2005 DGC while waiting for Alice to be completed.

In 2005, I worked on a PID controller that calculated the steering and throttle/brake commands needed to keep Bob/Alice on the desired path. When I started working on this project, I’d assumed that the control theory would be the tricky part. Instead, I wound up spending most of my effort characterizing and negotiating the interfaces. If new paths generated by the planner weren’t continuous with the robot’s current state, no amount of gain tuning could fix the vehicle’s overall performance. There’s a tricky balance in how far in front of the vehicle a trajectory should remain fixed: too short, and you get jerky control; too far, and the vehicle won’t be able to avoid a newly detected obstacle. Similarly, if my output steering commands were too high frequency, we’d blow up the steering pump. Repeatedly. This led to negotiations with the vehicle team over how much responsiveness was worth sacrificing to protect the hardware.
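To make the responsiveness-versus-hardware tradeoff concrete, here is a minimal sketch of a PID loop whose output is rate limited. This is not the code that ran on Bob or Alice; the class name, the gains, and the `max_rate` parameter are all assumptions made up for illustration, and a slew-rate limit is just one plausible way to keep a command from changing too quickly.

```python
from dataclasses import dataclass


@dataclass
class RateLimitedPID:
    """Illustrative PID controller with a slew-rate limit on its output."""

    kp: float
    ki: float
    kd: float
    max_rate: float  # hypothetical: largest allowed output change per second
    integral: float = 0.0
    prev_error: float = 0.0
    prev_output: float = 0.0

    def step(self, error: float, dt: float) -> float:
        # Standard PID terms computed from the tracking error.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        raw = self.kp * error + self.ki * self.integral + self.kd * derivative

        # Clamp how far the command may move in one step, trading
        # responsiveness for gentler treatment of the actuator.
        max_delta = self.max_rate * dt
        delta = max(-max_delta, min(max_delta, raw - self.prev_output))

        self.prev_error = error
        self.prev_output += delta
        return self.prev_output


pid = RateLimitedPID(kp=1.0, ki=0.0, kd=0.0, max_rate=0.5)
first = pid.step(error=1.0, dt=0.1)
second = pid.step(error=1.0, dt=0.1)
```

With these made-up numbers the unlimited controller would jump straight to its full command, while the limited one creeps toward it by at most `max_rate * dt` per step. Lowering `max_rate` protects the hardware and costs responsiveness, which is the negotiation described above.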

Launching for the 2005 DARPA Grand Challenge

(Alice! Once you have a Bob, the next one has to be Alice.)

Alice was Team Caltech’s entry for the 2005 DARPA Grand Challenge, which was another desert race, and for the 2007 DARPA Urban Challenge, which required following traffic laws while interacting with other cars/robots.

In 2007, my main project was a module that used the 4 bumper-mounted lidars to track moving vehicles. I did this by segmenting each scan at range discontinuities, looking for L-shaped segments in the 2D scan, and then associating nearby segments in sequential scans. By looking at the direction a segment was moving, I could determine which corner we were tracking, and use the corner coordinates for a more accurate representation of the vehicle’s location. I used a simple Kalman filter to predict the motion of vehicles while they were occluded, and even to reassociate them with an existing track when they reappeared. The basic algorithm design was sound, but I struggled with the implementation, spending way too much time debugging. This experience made me realize that I needed to become a better programmer if I wanted to be a roboticist.
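Here is a rough sketch of the first and third steps of that pipeline: splitting a scan at range discontinuities, and associating segments between consecutive scans. It is not the original module. The function names, the `jump_threshold` and `gate` values, and the greedy nearest-centroid matching are all assumptions chosen for illustration, and the L-shape fitting and Kalman filtering are left out entirely.

```python
import math


def segment_scan(ranges, angle_min, angle_step, jump_threshold=0.5):
    """Split a 2D scan into segments wherever adjacent ranges jump."""
    segments, current = [], []
    prev_r = None
    for i, r in enumerate(ranges):
        # A large change in range between neighboring beams starts a new segment.
        if prev_r is not None and abs(r - prev_r) > jump_threshold:
            segments.append(current)
            current = []
        theta = angle_min + i * angle_step
        current.append((r * math.cos(theta), r * math.sin(theta)))
        prev_r = r
    if current:
        segments.append(current)
    return segments


def centroid(segment):
    """Mean (x, y) of the points in a segment."""
    xs, ys = zip(*segment)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def associate(prev_segments, new_segments, gate=1.0):
    """Greedy nearest-centroid matching; returns {new_index: prev_index}."""
    matches = {}
    prev_centroids = [centroid(s) for s in prev_segments]
    for j, seg in enumerate(new_segments):
        c = centroid(seg)
        best, best_d = None, gate
        for i, p in enumerate(prev_centroids):
            d = math.dist(c, p)
            # Only accept unclaimed segments that fall inside the gate.
            if d < best_d and i not in matches.values():
                best, best_d = i, d
        if best is not None:
            matches[j] = best
    return matches


scan_a = segment_scan([1.0, 1.05, 1.1, 3.0, 3.05], angle_min=-0.2, angle_step=0.1)
scan_b = segment_scan([1.1, 1.15, 1.2, 3.1, 3.15], angle_min=-0.2, angle_step=0.1)
tracks = associate(scan_a, scan_b)
```

Centroids are a crude stand-in here. As described above, tracking a fitted corner gives a steadier estimate, because the visible portion of a vehicle (and therefore its centroid) shifts as the viewing angle changes.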

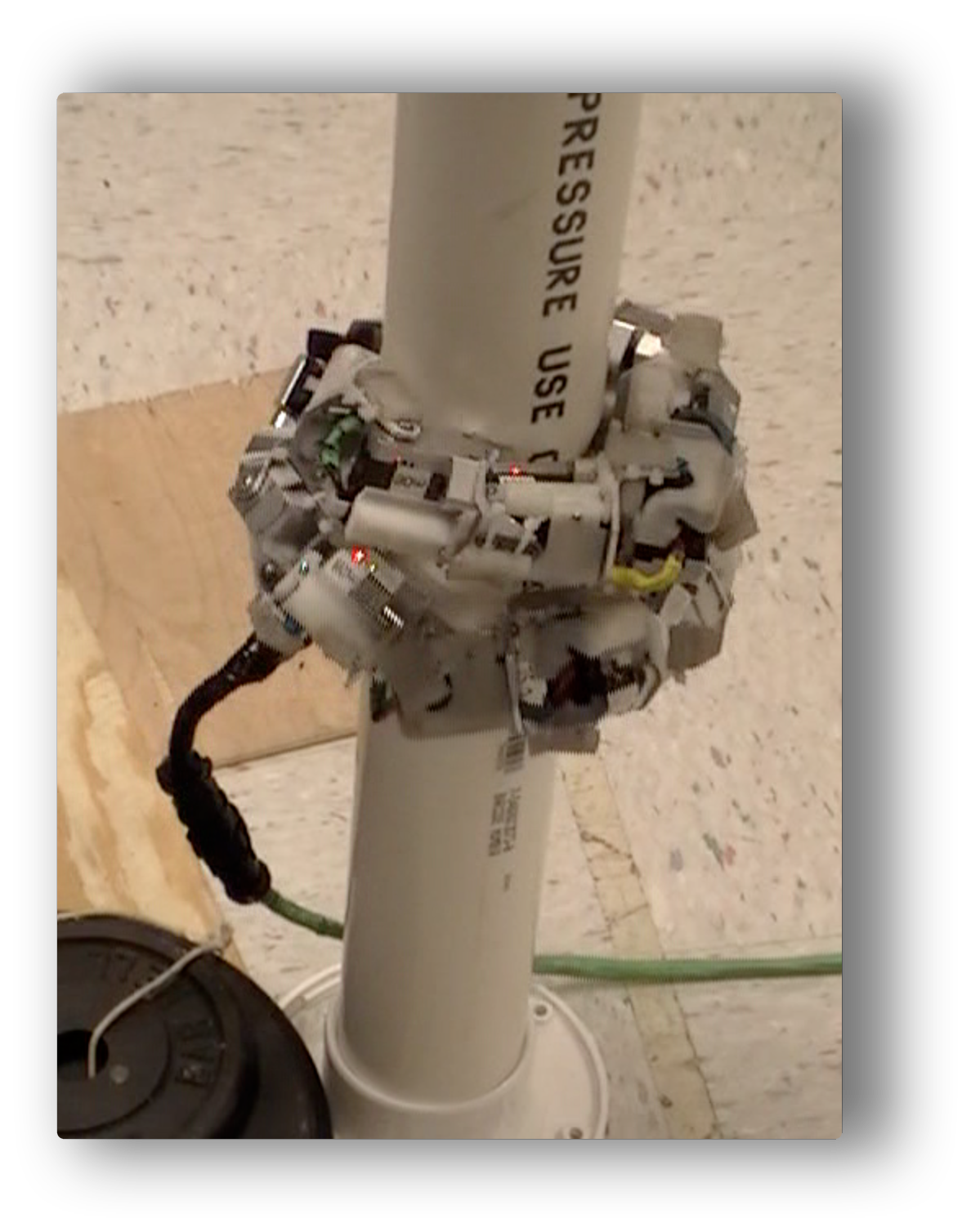

They can also climb legs.

Snake robots - used for a course project on reinforcement learning, where we attempted to learn gait parameters that would lead to more efficient locomotion.

(image from HERB’s Facebook page)

HERB! I had a summer internship at Intel Research, working with their Home Exploring Robotic Butler.

My first project was to determine the relative calibration between a spinning laser scanner and a camera and use that to generate colorized point clouds. Once everything was calibrated, I designed an algorithm that used Markov Random Fields to predict the occupancy status of occluded areas. In other words, I was trying to predict that a glass is circular after only having seen the front of it, which is an important capability if you want your robot to be able to manipulate previously-unmodeled objects.

(Kuka youBot)

I attended the 4th BRICS research camp on robot software architectures, where we used these in the context of a navigation task. The goal was to develop an architecture that would allow the robot to switch between navigation by following waypoints (whose relative spatial layout could and would change) and navigation in gridmaps with a fixed geometry. I wrote a state machine that was in charge of switching between the different navigation modes, and a controller that followed waypoints in the order specified, with the constraint that the next one in the list had to be visible from the current one.
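The waypoint constraint is easy to state in code. Below is a minimal sketch, assuming waypoints are plain `(x, y)` tuples and that visibility is supplied as a callback; `follow_in_order` and `within_range` are hypothetical names, and a distance check stands in for whatever visibility test the real robot used.

```python
import math
from typing import Callable, List, Sequence, Tuple

Waypoint = Tuple[float, float]


def follow_in_order(
    route: Sequence[Waypoint],
    is_visible: Callable[[Waypoint, Waypoint], bool],
) -> List[int]:
    """Return the waypoint indices reached, visiting them strictly in order.

    Following stops as soon as the next waypoint is not visible from the
    current one.
    """
    if not route:
        return []
    reached = [0]
    for nxt in range(1, len(route)):
        if not is_visible(route[reached[-1]], route[nxt]):
            break
        reached.append(nxt)
    return reached


def within_range(max_dist: float) -> Callable[[Waypoint, Waypoint], bool]:
    """Stand-in visibility test: 'visible' means closer than max_dist."""
    def check(a: Waypoint, b: Waypoint) -> bool:
        return math.dist(a, b) <= max_dist
    return check


route = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
reached = follow_in_order(route, within_range(1.5))
```

Because the check only ever looks at the current waypoint and the next one, it doesn't care where the waypoints sit in any global frame, which fits a task where their relative layout could change.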

(LAGR)

I was the technical lead for CMU’s entry into the TARDEC CANINE challenge. This was essentially a competition for robots to play fetch in the presence of moving obstacles. I designed the architecture that we used, and wrote the state machine that handled high-level control at each stage of the challenge. I also used this robot for a research project that investigated using Monte-Carlo Tree Search to plan paths that minimized distance traveled while maximizing information gain.

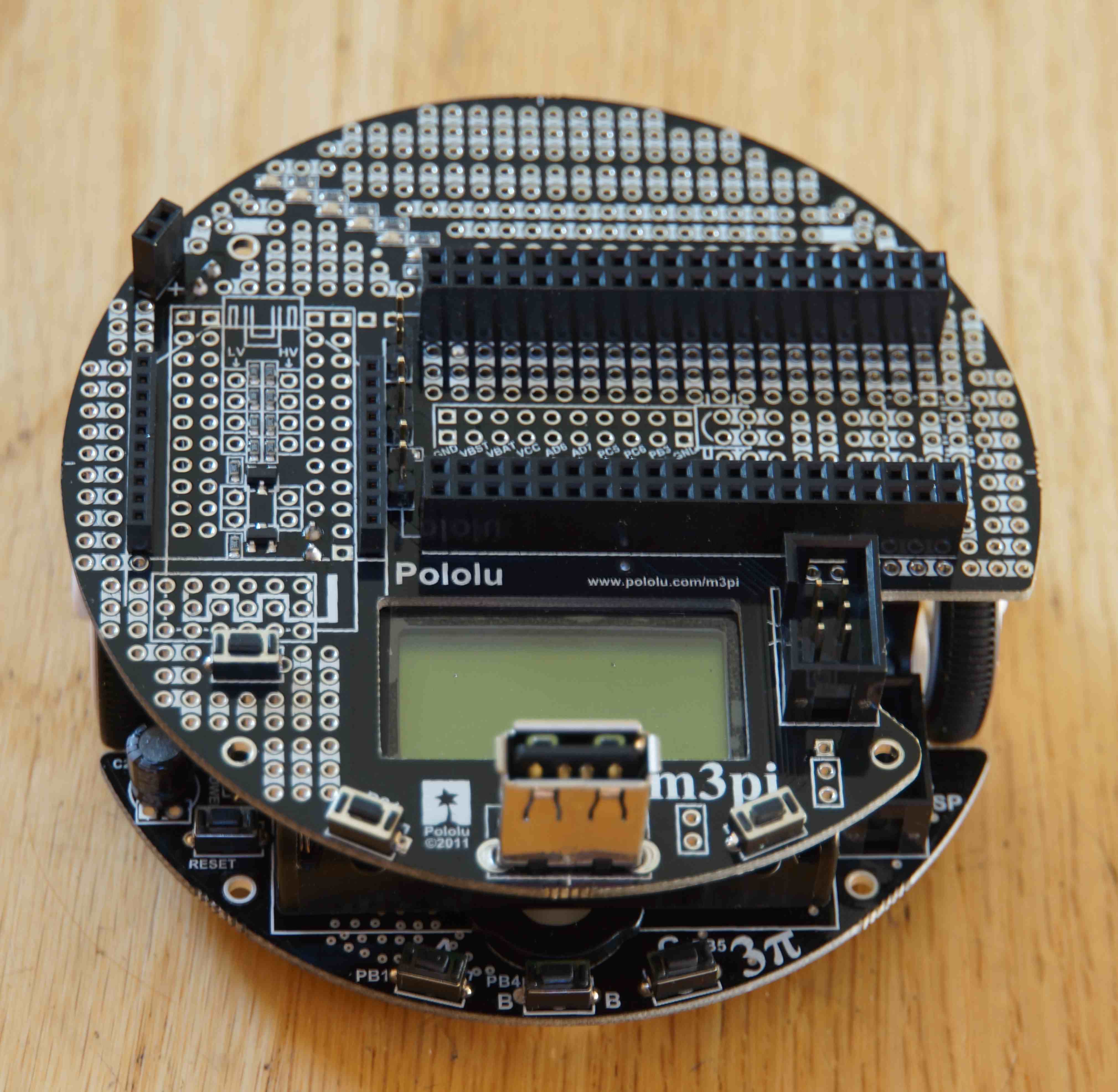

m3pi & Sphero - I wanted to play with robots at home and at Hacker School; this is what I could afford. I wrote a ROS driver for the m3pi, and put together a simple demo where it navigated between goal waypoints, using an overhead Kinect to estimate its position.

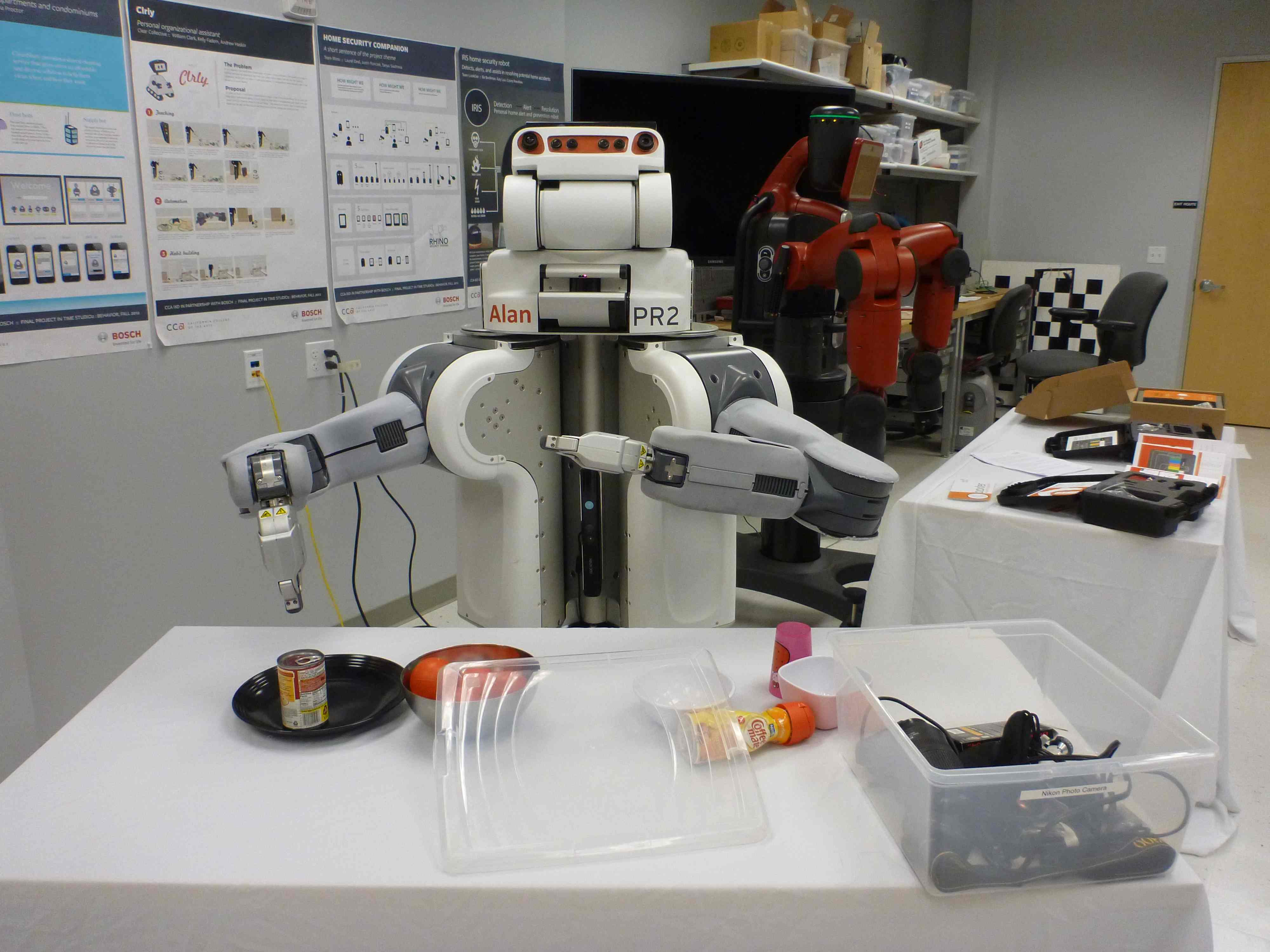

PR2! I had an internship at Bosch, working towards the far-off goal of having a robot clean up after me. Robot manipulation in clutter is an open problem; we hypothesized that if a human provided guidance for the initial segmentation, the rest of the task might become tractable. I designed and implemented a modular architecture for shared autonomy in the context of table clearing, with interchangeable human interfaces and segmentation algorithms.

Baxter! Also at Bosch. I didn’t use this robot for research, but it was involved in a prank.

(image from Google’s blog post)

Google car! Best internship ever. Some of the stuff I worked on even wound up in the video they released.

Artemis! This was a hover-capable autonomous underwater vehicle built by Stone Aerospace in order to explore underneath the McMurdo Ice Shelf. In this picture, we’re testing it at Lake Travis. One of my colleagues is hanging out in a kayak next to Artemis, trying to keep motorboats from running over it while we test. I worked on the programming team, which handled everything from writing hardware drivers to designing and implementing the high-level state machine that ran mission scripts and switched seamlessly between manual and autonomous control.